If you think that workers heavily reliant on generative AI may be less prepared to tackle questions that can't be succinctly answered by the machine, you may just be right.

A recent paper from Microsoft and Carnegie Mellon researchers set out to study generative AI's effects on workers' "cognitive effort," based on the workers' own self-reports. The paper warns, "Used improperly, technologies can and do result in the deterioration of cognitive faculties that ought to be preserved."

"To that end, our work suggests that GenAI tools need to be designed to support knowledge workers’ critical thinking by addressing their awareness, motivation, and ability barriers."

Effectively, when users found an AI-generated response satisfying, they tended to believe they had applied less critical thinking to it than they would to a response that felt more complex. On top of that, qualities like critical thinking and satisfaction are subjective by nature.

"In future work, longitudinal studies tracking changes in AI usage patterns and their impact on critical thinking processes would be beneficial."

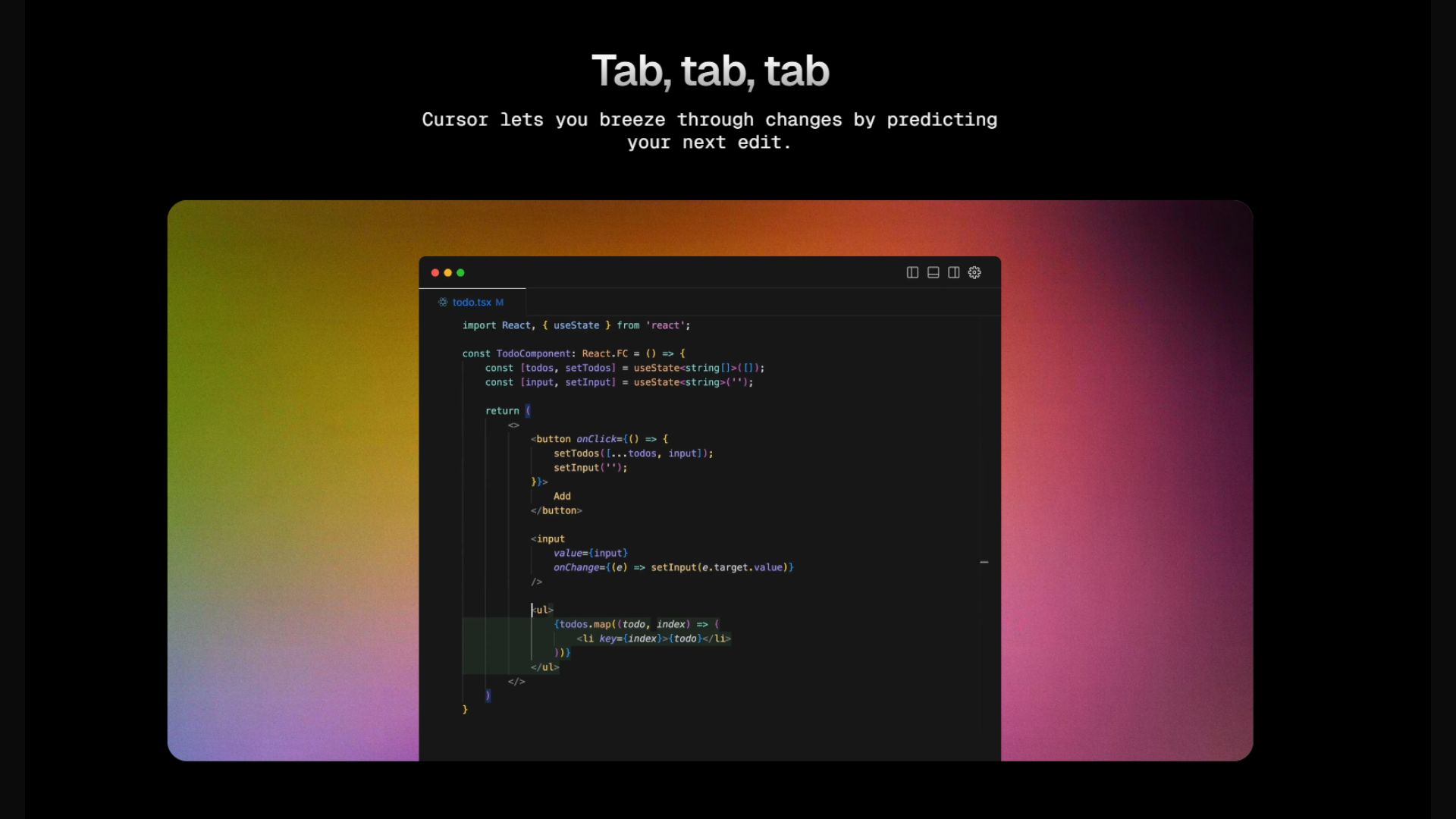

Nonetheless, the conclusion that more faith in generative AI tools can lead to less critical thinking about their results is echoed by a real-world AI tool. Cursor, an AI-based code editor, recently refused to produce over 750 lines of code, stating it could not generate that amount for the user as it would be "completing your work" (via ). It further explains:

"Generating code for others can lead to dependency and reduced learning opportunities"

The user reporting this 'issue' responded to the message, saying, "Not sure if LLMs know what they are for (lol)." Replying to a comment, the same user said their original prompt for Cursor was "just continue generating code, so continue then, continue until you will hit closing tags, continue generating code".

However, the 'logic' behind Cursor's response does make sense in light of this recent paper. If a coder asks AI to write hundreds of lines of code and problems then crop up in that code, it is much harder to track them down when you don't know what's there in the first place.

Fundamentally, generative AI tools are fallible. They are created by scraping data (), and they are prone to hallucinations thanks to the sheer amount of information they draw on. They can, and often will, be wrong, so it's important to stay critical of the information they give you.

As this study suggests, if AI tools are built into a workflow, their output has to be consistently scrutinized to avoid losing the critical thinking skills needed to handle such wide swathes of data.

As the paper reports, "GenAI tools are constantly evolving, and the ways in which knowledge workers interact with these technologies are likely to change over time." Maybe the next step for these AI tools is to put up barriers against taking over people's work outright, and to take a step back in favor of human creativity. Okay, maybe I actually mean 'hopefully.'